RT

September 4, 2021


“We apologize to anyone who may have seen these offensive recommendations,” Facebook said in a statement to the media, adding that the entire topic recommendation feature has been disabled.

“As we have said, while we have made improvements to our AI, we know it’s not perfect, and we have more progress to make,” the company said.

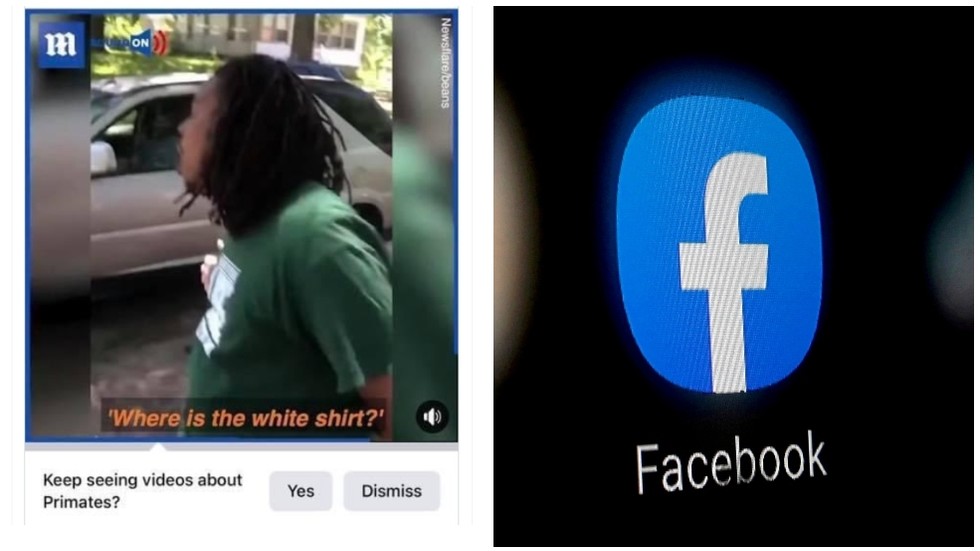

Darci Groves, a former content design manager at Facebook, said a friend sent her a screenshot of a video featuring black men, which included the company’s auto-generated prompt asking viewers if they wanted to “keep seeing videos about primates.”

Um. This “keep seeing” prompt is unacceptable, @Facebook. And despite the video being more than a year old, a friend got this prompt yesterday. Friends at FB, please escalate. This is egregious. pic.twitter.com/vEHdnvF8ui

— Darci Groves (@tweetsbydarci) September 2, 2021

The video, uploaded by UK tabloid the Daily Mail in June 2020, contained clips of two separate incidents in the US – one of a group of black men arguing with a white individual on a road in Connecticut, and one of several black men arguing with white police officers in Indiana before being detained.

According to The New York Times, Groves posted the screenshot in question to a product feedback forum for current and former Facebook employees. A product manager then called the recommendation “unacceptable” and promised to investigate the incident.

Last year, Facebook formed a team at Instagram to study how different minority users are affected by algorithms. The move was made after the social media giant was criticized for overlooking racial bias on its platforms.

Algorithms used by tech giants have come under fire for embarrassing mistakes in the past. In 2015, Google apologized after its Photos app labeled a picture of black people “gorillas.”